Chris Julien

- Biography

Chris Julien works as research director at Waag. As a member of the management team, he is responsible for the collective research agenda and methodology of the institution. He is also one of the institution’s spokespersons and as such maintains Waag’s international research network.

His ability to translate philosophical vistas into concrete projects makes him a core playmaker of the institution. A long-term focus on interdisciplinary approaches in academic and societal contexts shapes the new Public Research agenda of Waag. In it, individuals as ethical actors are placed centre-stage throughout the entire chain of research, design and implementation of social innovation.

Before his role at Waag, Chris worked as finance director of the Studio 80 foundation, co-founded Novel Creative Consultancy and researched new ways of working at FreedomLab. At this time he is a committee member at Creative Industries Fund NL, chairman of the GroenLinks committee on arts & culture in Amsterdam, and a regular fixture at Red Light Radio with his POP CTRL shows.

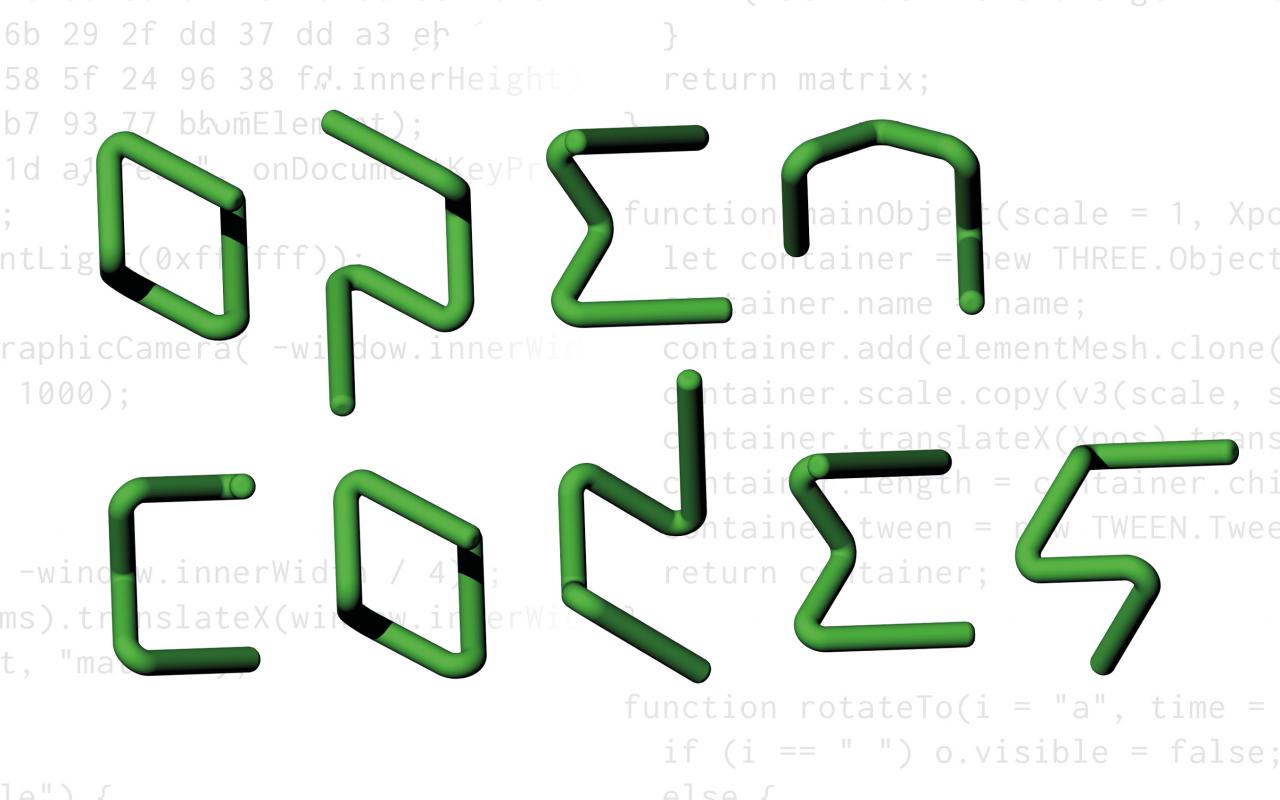

Biased-by-default: AI Culture lab @ Waag, Amsterdam – A Presentation for the Open Conference »Art and Artificial Intelligence«

In 1984, Donna Haraway famously stated in her Cyborg Manifesto that »Technology is not neutral.« As we enter the Anthropocene thirty-five years on, her point becomes more pertinent than ever. Is it actually possible to be neutral, or was the possibility of such a cool, distanced view merely a modern fantasy, conjured up by the European man during his ongoing ‘discovery’ and conquest of the globe?

As Haraway continued, »We’re inside what we make and it is inside of us.« The smooth borders between technology and society, between nature and culture are crumbling, and we are finding ourselves collectively part of the situation we once thought to look upon and control. If the notion of neutrality has become untenable, then how should we understand our actual positions? What does it mean to be constitutively not-neutral? Haraway continues: »We're living in a world of connections — and it matters which ones get made and unmade.«

This shift of perspective, from ‘on’ to ‘in’ the world, has far-reaching ethical and epistemological consequences. We’ve lost access to a meta-position, and need to describe our position in relation to, and therefore from within, any phenomenon under consideration. Curiously, Artificial Intelligence shines a contemporary light on what this position of not-neutrality entails.

Rather than reaffirming the smooth and objectively accessible reality that came so naturally to our modern sensibilities, these ‘culture machines’ give us a clear look at the cultural entanglements at the roots of our technologies. From the perspective of algorithmic computation, not-neutral is commonly defined as ‘bias’. Rather than a ‘bug’ in our shiny new systems of optimization that will be designed away in future iterations of the technology, bias seems very similar to the cat passing Neo and his band as he traverses his once-familiar world in the Matrix. Not so much a small glitch in the system as a moment in which the system reveals its material entanglement. Bias isn’t a bug of algorithmic systems but rather reveals the constitutive role of the human as a cultural agent in the functioning of these systems. Developing our understanding of the culturally entangled roots of AI technologies is the core research focus of the AI Culture lab, which I will continue to describe during my presentation at ZKM.